SLURM

UHD uses the SLURM Workload Manager to manage jobs on its cluster. SLURM is an open-source, fault-tolerant, and highly scalable cluster management and job scheduling system for large and small Linux clusters. SLURM was formerly known as the Simple Linux Utility for Resource Management, and it is used by many of the world's supercomputers and computer clusters. This page describes how to submit and manage jobs on the cluster using SLURM, assuming you already have access to the cluster. If you need access, please see the "Getting Started" page for more details.

The table below lists the most commonly used SLURM commands on our cluster's head node. These commands submit jobs, show information about queued, running, or completed jobs, and cancel jobs.

| Command | Description |
|---|---|
| `sbatch <filename.sh>` | Submit a batch script to SLURM for processing |
| `squeue` | Show information about your job(s) in the queue |
| `scancel <job-id>` | Cancel a pending or running job |
| `sacct` | Show information about current and previous jobs |

- Understanding SLURM

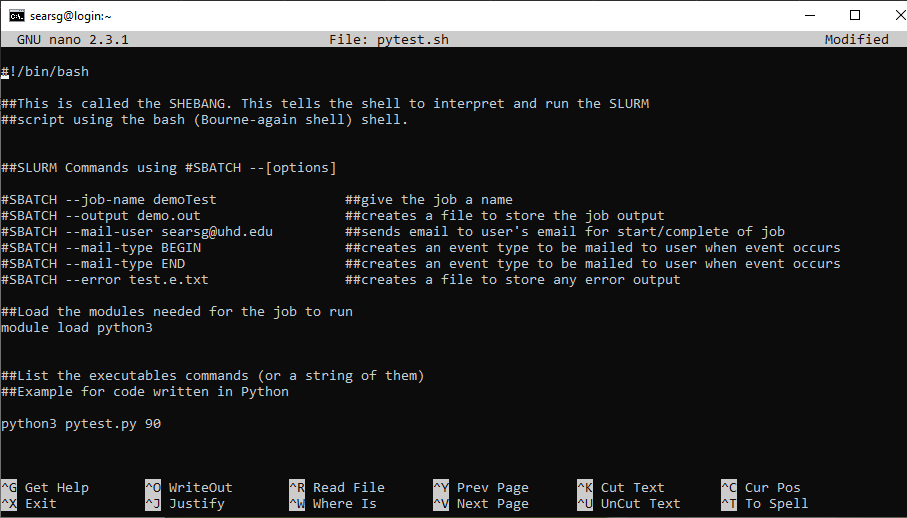

In our Bash Shell page, we went over how to create a script and showed some SLURM commands. The #SBATCH lines in a script are what pass the job's parameters to the job scheduler: SLURM treats any line that begins with #SBATCH (in all caps) as a directive. To comment out a directive, add a second pound sign at the start of the line (#SBATCH is a SLURM directive, ##SBATCH is a comment). SLURM stops reading #SBATCH directives at the first executable command, so keep all of them at the top of the script, before any commands.
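A minimal illustration of the difference, assuming a bash batch script; the job name and time limit are placeholder values. SLURM applies the first directive and skips the second, while bash itself treats both lines as ordinary comments:

```bash
#!/bin/bash
# Active directive: SLURM applies it.
#SBATCH --job-name=demoTest
# Disabled directive: the second # makes SLURM skip it.
##SBATCH --time=01:00:00

# SLURM_JOB_ID is set by SLURM inside a running job.
echo "Hello from SLURM job $SLURM_JOB_ID"
```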

There are four main parts to a SLURM script, and you should include all four to submit a job to the scheduler successfully. Here we have a simple script set up to show the structure of the script and the correct usage of some SLURM directives.

- The shebang, as described in our Bash Shell page, tells the system to interpret and run the SLURM script using the Bourne-again shell (bash), typically #!/bin/bash. This should always be the first line in the script.

- The Resource Request section sets the resources the job needs on the compute node. In our example, --job-name demoTest sets the name of the job, and --output demo.out writes the job's output to a file named demo.out (you can choose any name). Always add an --error directive as well, such as --error test.e.txt, with whatever file name you like: any error messages the job produces are written to this file instead of being mixed into the output file.

- Dependencies is the section where you load all the software the job needs. In our example script we use module load python3, which loads the Python 3 module so that the python3 interpreter is available to run our code on the cluster.

- Job Tasks is the last section of the script, where you list the tasks to be carried out. In our example script, we call python3 to run our Python code with an input of 90.
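Because the example script above appears only as an image, here is a text sketch of the same four-part structure. It assumes the Python script is named pytest.py and sits in the submission directory, and that the module is called python3 as described above; adjust the names for your own job.

```bash
#!/bin/bash
# 1. Shebang (above): run this script with bash.

# 2. Resource request (SBATCH directives)
#SBATCH --job-name=demoTest
#SBATCH --output=demo.out
#SBATCH --error=test.e.txt

# 3. Dependencies: load the software the job needs
module load python3

# 4. Job tasks: run the Python script with an input of 90
python3 pytest.py 90
```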

The resource request section of the script is made up of SBATCH directives, which set the parameters of the job being submitted. Below is a list of common SBATCH directives used on UHD's HPC cluster.

| Directive | Description |
|---|---|
| --job-name | Specifies a name for the job allocation. If not specified, the default is the name of the batch script. |
| --output | Writes the batch script's standard output to the given file name. |
| --partition | Requests a specific partition for the resource allocation (gpu, interactive, normal). If not specified, the default partition is normal. |
| --ntasks | Tells the SLURM controller that job steps run within the allocation will launch at most this number of tasks, so that it can allocate sufficient resources. |
| --cpus-per-task | Tells the SLURM controller that each task will require this number of processors. Without this option, the controller allocates one processor per task. |
| --mem-per-cpu | The minimum memory required per allocated CPU. Note: specifying --mem-per-cpu is highly recommended but not required. |
| --time | Sets a limit on the total run time of the job allocation. Acceptable formats include minutes, hours:minutes:seconds, and days-hours:minutes:seconds. |
| --mail-user | Defines the user who will receive email notifications of the state changes selected by --mail-type. |
| --mail-type | Notifies the user by email when certain events occur. Common values are BEGIN, END, FAIL, and ALL. The recipient is set with --mail-user. |

To learn more about the directives you can use in your script, please visit the SchedMD Command Summary page or type $man sbatch.
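A sketch of a script header that combines several of the directives above. The partition, resource counts, memory, time limit, and email address are illustrative placeholders, not recommended values for UHD's cluster:

```bash
#!/bin/bash
#SBATCH --job-name=demoTest
#SBATCH --output=demo.out
#SBATCH --error=test.e.txt
#SBATCH --partition=normal
# One task with 4 CPUs and 2 GB of memory per CPU (placeholder values)
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=4
#SBATCH --mem-per-cpu=2G
# Time limit of 1 day and 2 hours, in days-hours:minutes:seconds format
#SBATCH --time=1-02:00:00
# Email on start, end, and failure (placeholder address: use your own)
#SBATCH --mail-user=your.name@example.edu
#SBATCH --mail-type=BEGIN,END,FAIL

module load python3
python3 pytest.py 90
```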

- Submitting a Job

Now that you have written your submission script and a program in your language of choice, such as Python, how do you run it using SLURM?

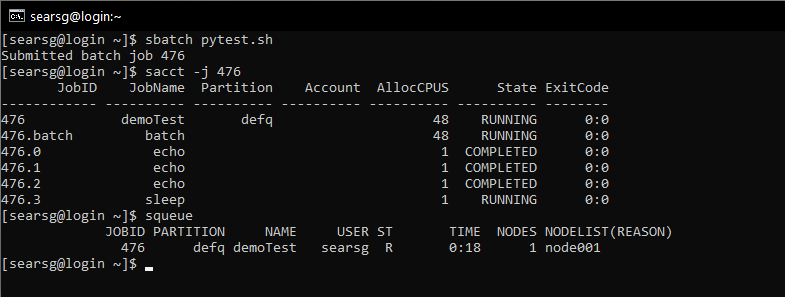

The sbatch command submits the job to the scheduler. In the terminal, make sure your submission script and your Python script are in the same directory, then type the command $sbatch filename.sh (replacing filename.sh with the name of your submission script).

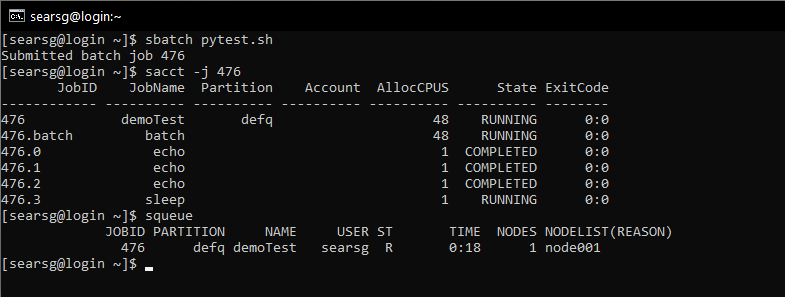

The command used here was sbatch pytest.sh. This submitted the job that runs the pytest.py script with the parameter of 90 set in the submission script shown previously. Once the job is submitted, it is placed in the queue and assigned a job ID, which in this case was 477. After submitting the job, you can use other SLURM commands to gather information about it.
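When scripting submissions, it can help to capture the job ID instead of copying it by hand. A minimal sketch: sbatch normally prints a line such as "Submitted batch job 477", and the hypothetical helper job_id_from_sbatch below keeps the last word of that line. Alternatively, sbatch --parsable prints only the job ID.

```bash
#!/bin/bash

# Hypothetical helper: extract the job ID from sbatch's confirmation line,
# e.g. "Submitted batch job 477" -> "477".
job_id_from_sbatch() {
    printf '%s\n' "${1##* }"   # strip everything up to the last space
}

# On the cluster you would capture sbatch's real output:
#   jobid=$(job_id_from_sbatch "$(sbatch pytest.sh)")
# or let sbatch print only the ID (it may append ";cluster" on multi-cluster setups):
#   jobid=$(sbatch --parsable pytest.sh)
jobid=$(job_id_from_sbatch "Submitted batch job 477")
echo "Job ID: $jobid"
```

The captured ID can then be passed to sacct, scontrol, squeue, or scancel, as described in the sections that follow.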

- Showing Information on Jobs

After submitting your script to SLURM, you can view information about the job while it runs or after it completes, such as its state, the resources it was allocated, and its exit code.

- SACCT

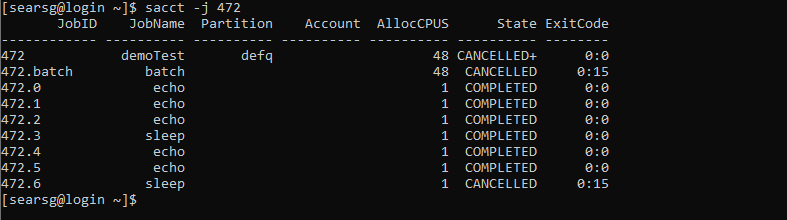

The sacct command displays information about the jobs and job steps recorded in the SLURM accounting log. By default, it shows each job and job step along with its status and exit code. You can tailor the output with sacct's options, for example to choose which fields are shown. To see a specific job, type $sacct -j followed by the job ID.
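To choose the fields yourself, pass --format. A sketch, assuming job 472 from the example below; the field names are standard sacct fields. Adding --parsable2 --noheader produces "|"-separated lines that are easy to read in a script, and the hypothetical show_job_line function splits one such line. The ExitCode field has the form exit_code:signal.

```bash
#!/bin/bash

# Interactive use: choose which columns sacct prints for job 472.
#   sacct -j 472 --format=JobID,JobName,Partition,AllocCPUS,State,ExitCode

# Hypothetical helper: split one line of
#   sacct -j 472 --parsable2 --noheader --format=JobID,State,ExitCode
# into its fields and report them.
show_job_line() {
    printf '%s\n' "$1" | {
        IFS='|' read -r jobid state exitcode
        # ExitCode is "exit_code:signal"; keep the part before the colon.
        echo "$jobid $state exit=${exitcode%%:*}"
    }
}

# Only query sacct when it is available (i.e., on the cluster).
if command -v sacct >/dev/null 2>&1; then
    sacct -j 472 --parsable2 --noheader --format=JobID,State,ExitCode |
        while IFS= read -r line; do
            show_job_line "$line"
        done
fi
```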

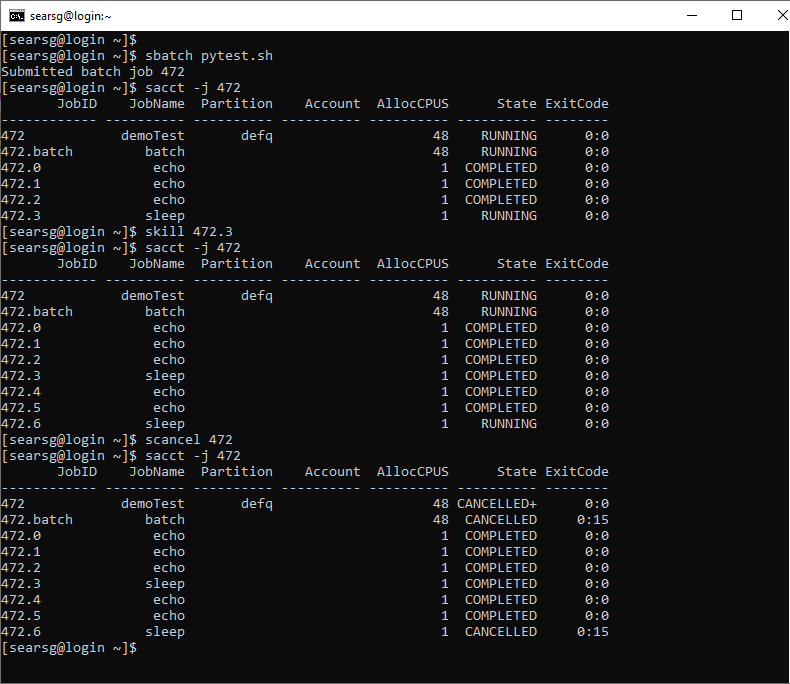

You can get information on running and completed jobs by passing the job ID as a parameter. Here, the command sacct -j 472 is used to show information about completed job 472. The output includes the partition the job ran on, the account, and the number of CPUs allocated to each job step. You can also see the state of the job and its exit code.

The first column lists the IDs of the job and its job steps. The first two rows are entries SLURM creates automatically for every batch job, covering the job as a whole and the batch script being executed. The third row, 472.0, contains information about the first process launched with srun. If the script runs more srun commands, the step IDs increment as 472.1, 472.2, 472.3, and so on.
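To see where the numbered steps come from, here is a sketch of a batch script that launches two steps with srun; the second command is a placeholder. In sacct, the first srun line would appear as step .0 of the job and the second as step .1, alongside the rows SLURM creates automatically.

```bash
#!/bin/bash
#SBATCH --job-name=demoTest
#SBATCH --output=demo.out
#SBATCH --error=test.e.txt
#SBATCH --ntasks=1

module load python3

# First srun launch: recorded as step <job-id>.0
srun python3 pytest.py 90

# Second srun launch: recorded as step <job-id>.1
srun hostname
```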

For more details about sacct, use the $man sacct command.

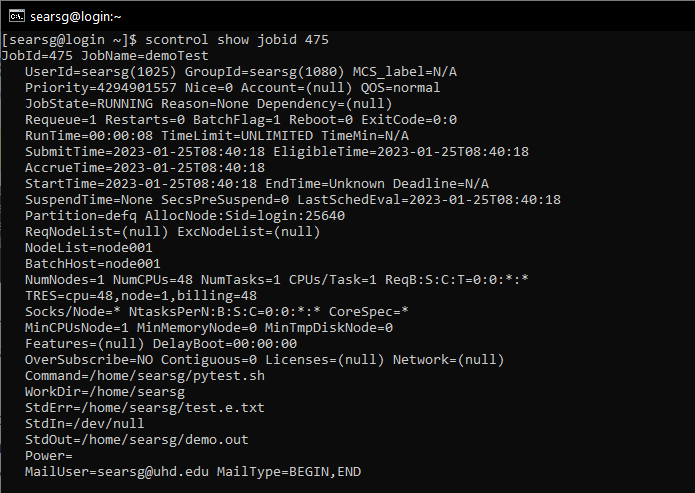

- SCONTROL

The scontrol command shows detailed information about a job that is pending or running, which is useful for troubleshooting. To run it, type $scontrol show jobid [jobid], replacing [jobid] with your job's ID.
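The output of scontrol show job is a long list of Key=Value pairs. A sketch that pulls out just the job state and the reason field, assuming job ID 477 from the earlier example; the helper name job_state is hypothetical, and the -o option prints each job's record on a single line:

```bash
#!/bin/bash

# Hypothetical helper: print the JobState and Reason fields from
# scontrol's Key=Value output (read from standard input).
job_state() {
    tr ' ' '\n' | grep -E '^(JobState|Reason)='
}

# Only query scontrol when it is available (i.e., on the cluster).
if command -v scontrol >/dev/null 2>&1; then
    scontrol -o show job 477 | job_state
fi
```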

For more details about scontrol, use the $man scontrol command.

- SQUEUE

The squeue command is useful for viewing the status of jobs in the queue managed by SLURM. To see a list of all jobs in the queue, type $squeue. This lists all the jobs queued on the partitions you are authorized to access.
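Some common ways to narrow the list are sketched below; the job ID 477 and the gpu partition come from earlier on this page. The hypothetical wait_for_job function polls squeue until a job leaves the queue, which can be handy before reading its output file; the 30-second interval is an arbitrary choice.

```bash
#!/bin/bash

# Useful squeue filters (run these on the head node):
#   squeue -u "$USER"              # only your jobs
#   squeue -j 477                  # a single job
#   squeue -p gpu                  # jobs in the gpu partition
#   squeue -u "$USER" -t PENDING   # your jobs that are still waiting

# Hypothetical helper: block until the given job no longer appears in squeue.
wait_for_job() {
    job_id="$1"
    # -h suppresses the header, so any output means the job is still queued.
    while [ -n "$(squeue -h -j "$job_id" 2>/dev/null)" ]; do
        sleep 30
    done
    echo "Job $job_id is no longer in the queue"
}
```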

For more details about squeue, use the $man squeue command.

- Terminating Jobs

Sometimes you will need to cancel a job that has already been submitted. The scancel command or skill is used to end a job that is pending or running in the queue.

NOTE: A user cannot cancel jobs that belong to another user.

When using the scancel or skill command, make sure you use the correct job ID. You receive a job ID every time you submit a batch job; if you forget it or did not see it, type $sacct to check which of your jobs are running.
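A few scancel variations are sketched below. The job ID 477 and job name demoTest come from earlier examples; --name, --user, and --state are standard scancel filters, and since users cannot cancel other users' jobs, these filters only ever affect your own. The cancel_pending wrapper is hypothetical:

```bash
#!/bin/bash

# Common forms (run on the head node):
#   scancel 477                 # cancel one job by ID
#   scancel --name=demoTest     # cancel your jobs with this job name
#   scancel --user="$USER"      # cancel all of your jobs

# Hypothetical wrapper: cancel only your jobs that are still pending,
# leaving running jobs untouched.
cancel_pending() {
    scancel --user="${USER:-$(id -un)}" --state=PENDING
}
```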

For more information on the SLURM Workload Manager, please visit SchedMD or you can use the command $man slurm to get information as well.